LLM optimization for AIOps

Test and deploy the optimal LLM for performance, cost, and security.

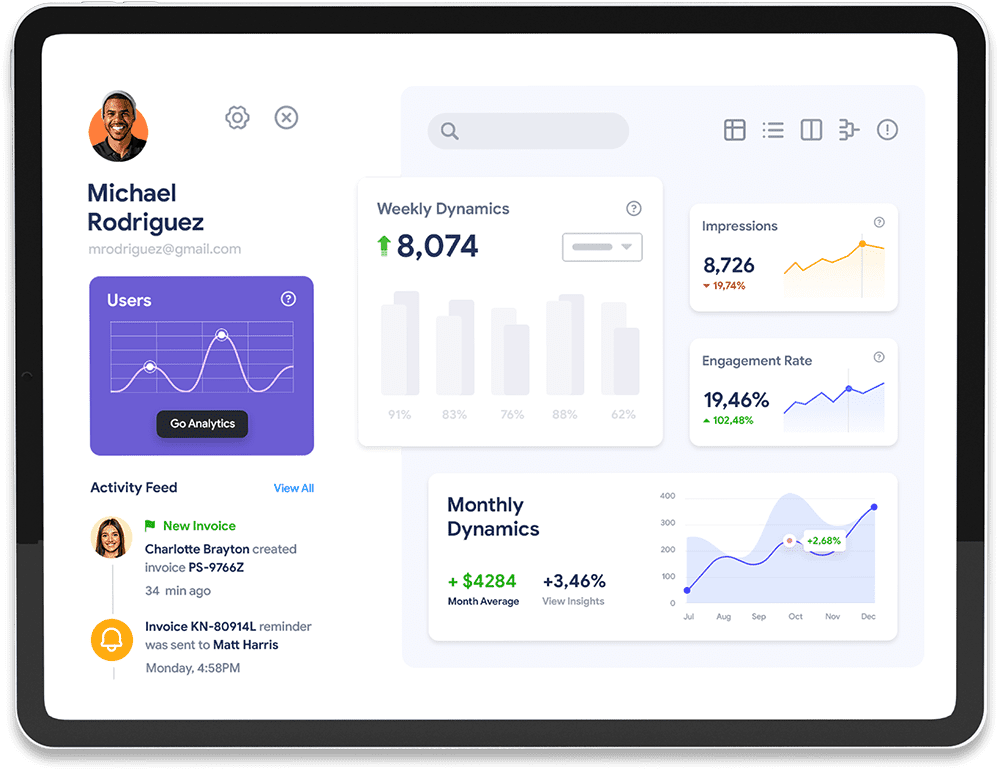

Run your LLM on the Cast AI platform to boost resource utilization and reduce cost.

The Cast router optimizes your queries to maximize free credits from providers.

Set a preferred model order to ensure your queries always use the LLMs you prioritize.
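A preferred model order can be pictured as a simple fallback list. The sketch below is illustrative only and does not show the platform's actual API: the `Provider` class, the `pick_model` function, and the model names are hypothetical, and availability is reduced to a single boolean flag.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    """Hypothetical record for one LLM endpoint (not a real platform type)."""
    name: str
    available: bool = True


def pick_model(preferred_order, providers):
    """Return the first available model in the preferred order, or None."""
    by_name = {p.name: p for p in providers}
    for name in preferred_order:
        provider = by_name.get(name)
        if provider is not None and provider.available:
            return name
    return None


# Hypothetical model names, used only for illustration.
providers = [
    Provider("model-a", available=False),
    Provider("model-b"),
    Provider("model-c"),
]
choice = pick_model(["model-a", "model-b", "model-c"], providers)
print(choice)
```

Here the first entry is unavailable, so the request falls through to the next model in the list. A real router would also need to account for credits and provider limits.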

Make the best choice for your LLM application with all cost insights in one place.

Automatically route your requests to the best-fit LLM, balancing performance, cost, and provider limits.
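One way to think about this kind of routing is as a weighted score over cost and latency, restricted to models that still have quota left. The following is a minimal sketch under assumed inputs, not the product's routing algorithm: `ModelStats`, `route`, the weights, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModelStats:
    """Hypothetical per-model metrics a router might track."""
    name: str
    cost_per_1k_tokens: float  # assumed price unit
    p50_latency_ms: float      # assumed median latency
    requests_left: int         # remaining quota in the current rate-limit window


def route(models, cost_weight=0.5, latency_weight=0.5):
    """Pick the eligible model with the lowest weighted cost/latency score."""
    # Provider limits: skip models with no quota left.
    eligible = [m for m in models if m.requests_left > 0]
    if not eligible:
        return None
    # Normalize each metric by its maximum so the weights are comparable.
    max_cost = max(m.cost_per_1k_tokens for m in eligible) or 1.0
    max_latency = max(m.p50_latency_ms for m in eligible) or 1.0

    def score(m):
        return (cost_weight * m.cost_per_1k_tokens / max_cost
                + latency_weight * m.p50_latency_ms / max_latency)

    return min(eligible, key=score).name


# Made-up figures for illustration only.
models = [
    ModelStats("model-a", cost_per_1k_tokens=10.0, p50_latency_ms=100.0, requests_left=0),
    ModelStats("model-b", cost_per_1k_tokens=2.0, p50_latency_ms=300.0, requests_left=5),
    ModelStats("model-c", cost_per_1k_tokens=1.0, p50_latency_ms=800.0, requests_left=5),
]
print(route(models))
print(route(models, cost_weight=1.0, latency_weight=0.0))
```

With equal weights the faster mid-priced model wins. Weighting cost alone selects the cheapest model that still has quota.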

Deploy and manage AI models directly in your Kubernetes clusters and join organizations already running secure, self-hosted AI workloads with Cloudtuner.AI.
