Kubernetes cost monitoring

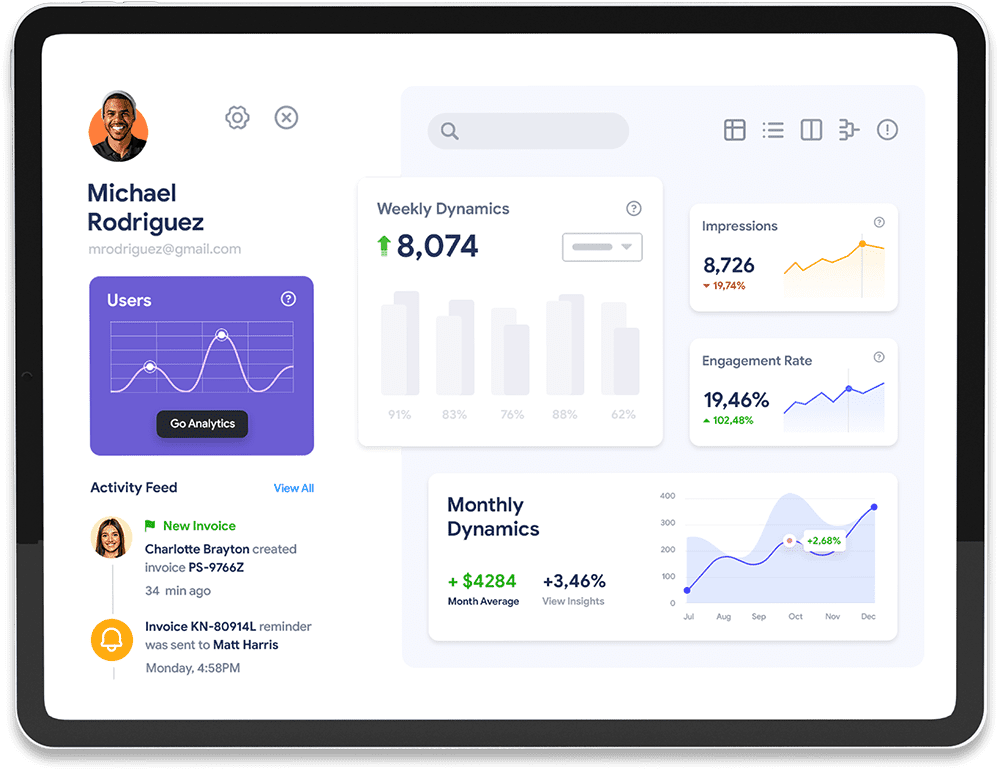

Manage your Kubernetes expenses for free with detailed real-time tracking down to each namespace, workload, and resource allocation group.


Transform your Kubernetes management with real-time visibility into resource utilization across your clusters

Analyze your Kubernetes spending with detailed breakdowns across workloads, namespaces, and allocation groups

Discover how much you could save with automation

Get insights into your network traffic and costs across all cluster levels, including cross-AZ traffic.

Receive actionable alerts every time your cluster experiences an anomalous cost change.

Create dynamic workload groups aligned with your teams or virtual modules, and monitor their costs over time.

Track resource utilization over time and get insights into how efficiently your clusters run.

View compute cost details for all your organization’s clusters in one place.

Integrate with your existing monitoring systems and visualize data using tools like Grafana.

Kubernetes cost monitoring is all about tracking the resource utilization and costs associated with running applications in a Kubernetes environment. It’s key for optimizing cloud spend and ensuring efficient resource allocation.

You can monitor costs in a K8s cluster by using tools that track resource consumption (such as CPU, memory, and storage) and correlate it with cloud billing data. This gives you visibility into costs across namespaces, workloads, and allocation groups.
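As a rough illustration of correlating consumption with billing data, the minimal sketch below turns per-pod CPU and memory usage into a cost per namespace. It assumes flat, hypothetical unit prices (`PRICE_PER_CPU_HOUR`, `PRICE_PER_GIB_HOUR`) and a hypothetical `PodUsage` record; a real tool would derive prices from your actual cloud bill and pull usage from a metrics source.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical unit prices for illustration only; real figures would come
# from your cloud bill (e.g. a node's hourly price split into CPU and memory).
PRICE_PER_CPU_HOUR = 0.031  # USD per vCPU-hour (assumed)
PRICE_PER_GIB_HOUR = 0.004  # USD per GiB-hour (assumed)


@dataclass
class PodUsage:
    """Hypothetical record of one pod's average usage over a time window."""
    namespace: str
    cpu_cores: float   # average vCPUs consumed over the window
    memory_gib: float  # average GiB of memory consumed over the window
    hours: float       # length of the window in hours


def cost_by_namespace(pods):
    """Sum each pod's CPU and memory cost into its namespace's total."""
    totals = defaultdict(float)
    for p in pods:
        cpu_cost = p.cpu_cores * p.hours * PRICE_PER_CPU_HOUR
        mem_cost = p.memory_gib * p.hours * PRICE_PER_GIB_HOUR
        totals[p.namespace] += cpu_cost + mem_cost
    return dict(totals)


pods = [
    PodUsage("checkout", cpu_cores=2.0, memory_gib=4.0, hours=24),
    PodUsage("checkout", cpu_cores=1.0, memory_gib=2.0, hours=24),
    PodUsage("search", cpu_cores=0.5, memory_gib=8.0, hours=24),
]
for ns, cost in sorted(cost_by_namespace(pods).items()):
    print(f"{ns}: ${cost:.2f}")
```

The same aggregation works for workloads or allocation groups: only the key used to group pods changes.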

Key metrics include CPU and memory utilization (ideally including the gap between provisioned and used resources), storage costs, network costs, and costs per namespace, workload, or allocation group.
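To make the gap between provisioned and used resources concrete, here is a minimal sketch that compares a workload's CPU requests with its actual usage. The `Workload` type and the flat `PRICE_PER_CPU_HOUR` rate are hypothetical assumptions for illustration, not any specific tool's data model.

```python
from dataclasses import dataclass

# Assumed hourly price per vCPU; substitute the rate from your cloud bill.
PRICE_PER_CPU_HOUR = 0.031


@dataclass
class Workload:
    """Hypothetical summary of one workload's CPU requests and usage."""
    name: str
    requested_cpu: float  # vCPUs reserved through resource requests
    used_cpu: float       # average vCPUs actually consumed


def cpu_efficiency(w: Workload) -> float:
    """Fraction of requested CPU actually used; can exceed 1.0 on bursts."""
    if w.requested_cpu == 0:
        return 0.0
    return w.used_cpu / w.requested_cpu


def idle_cpu_cost(w: Workload, hours: float) -> float:
    """Cost of CPU that was requested but left unused over `hours`."""
    idle = max(w.requested_cpu - w.used_cpu, 0.0)
    return idle * hours * PRICE_PER_CPU_HOUR


api = Workload("api", requested_cpu=4.0, used_cpu=1.0)
print(f"{api.name}: {cpu_efficiency(api):.0%} efficient, "
      f"${idle_cpu_cost(api, hours=720):.2f} idle per 30 days")
```

Memory follows the same pattern with a per-GiB price; tracking both over time shows which workloads are over-provisioned.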

Common K8s cost monitoring challenges include limited visibility into shared resources, complex cloud billing structures, and the difficulty of tracking utilization across multi-cloud setups. Comprehensive monitoring tools and well-defined cost allocation policies help address these challenges.
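One common cost allocation policy for shared resources (for example, cluster add-ons or a system namespace used by everyone) is to spread their cost across teams in proportion to each team's direct spend. The sketch below shows that proportional split; it is one possible policy under that assumption, not the only approach, and the `allocate_shared` helper is hypothetical.

```python
def allocate_shared(direct_costs: dict[str, float],
                    shared_cost: float) -> dict[str, float]:
    """Add a proportional slice of a shared cost to each namespace's cost."""
    if not direct_costs:
        return {}
    total = sum(direct_costs.values())
    if total == 0:
        # No usage signal to weight by, so split the shared cost evenly.
        share = shared_cost / len(direct_costs)
        return {ns: c + share for ns, c in direct_costs.items()}
    return {ns: c + shared_cost * (c / total)
            for ns, c in direct_costs.items()}


direct = {"checkout": 300.0, "search": 100.0}
print(allocate_shared(direct, shared_cost=80.0))
```

Because the split is proportional, the allocated totals always add up to the direct costs plus the shared cost, so nothing on the bill goes unaccounted for.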
